I am grateful to the National Science Foundation (NSF) and its Division of Computer and Network Systems (CNS) for supporting my CAREER project, which started in February 2022. This webpage documents the details of the project.

NSF CNS-2146171

CAREER: From Federated to Fog Learning: Expanding the Frontier of Model Training in Heterogeneous Networks

Synopsis

Today’s networked systems are undergoing fundamental transformations as the number of Internet-connected devices continues to scale. Fueled by the volumes of data generated, there has been a parallel rise in the complexity of machine learning (ML) algorithms envisioned for edge intelligence, and a desire to provide this intelligence in near real-time. However, contemporary techniques for distributing ML model training encounter critical performance issues due to two salient properties of the wireless edge: (1) heterogeneity in device communication/computation resources and (2) statistical diversity across locally collected datasets. These issues are further exacerbated at the geographic scale of the Internet of Things (IoT), where the cloud may be coordinating millions of heterogeneous devices.

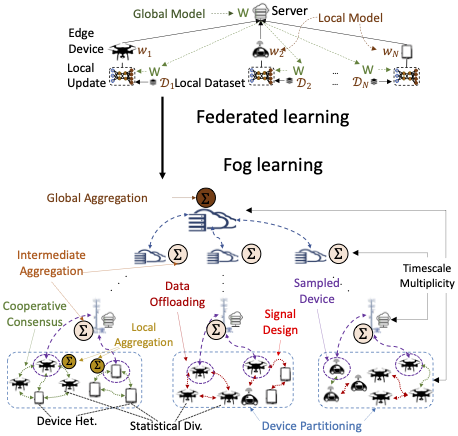

This project is establishing the foundation for fog learning, a new model training paradigm that orchestrates computing resources across the cloud-to-things continuum to elevate, and optimize over, the fundamental tradeoff between model learning and resource efficiency. The driving principle of fog learning is to intelligently distribute federated model aggregations throughout a multi-layer network hierarchy. The proliferation of device-to-device (D2D) communications in wireless protocols, including 5G-and-beyond, will act as a substrate for inexpensive local synchronization of datasets and model updates.
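As a rough illustration of distributing aggregations across a hierarchy, the sketch below averages device models at hypothetical fog nodes and then averages the fog-level models at the cloud. It is a minimal toy example under stated assumptions, not the project's algorithm: the names `weighted_average`, `fog_aggregate`, and `clusters` are invented for illustration, models are plain parameter lists, and weights are local sample counts.

```python
from typing import Dict, List, Tuple

Vector = List[float]
# Each entry pairs a parameter vector with the number of samples behind it.
WeightedModel = Tuple[Vector, int]


def weighted_average(models: List[WeightedModel]) -> WeightedModel:
    """Average parameter vectors weighted by sample count.

    Returns the averaged vector together with the total sample count,
    so the result can itself be averaged again one layer up.
    """
    total = sum(n for _, n in models)
    dim = len(models[0][0])
    avg = [sum(w[i] * n for w, n in models) / total for i in range(dim)]
    return avg, total


def fog_aggregate(clusters: Dict[str, List[WeightedModel]]) -> Vector:
    """Two-layer aggregation: devices -> fog nodes -> cloud (hypothetical layout)."""
    # Fog layer: each fog node averages only the devices in its own cluster.
    fog_models = [weighted_average(devices) for devices in clusters.values()]
    # Cloud layer: average the fog-level models, weighted by cluster data size.
    global_model, _ = weighted_average(fog_models)
    return global_model


# Toy data: two fog clusters with made-up device models and sample counts.
clusters = {
    "fog_a": [([1.0, 2.0], 10), ([3.0, 4.0], 30)],
    "fog_b": [([5.0, 6.0], 60)],
}
global_model = fog_aggregate(clusters)
print(global_model)
```

In this toy setting the two-layer result matches a single flat weighted average over all devices; the hierarchy changes where communication happens (devices talk to a nearby fog node rather than directly to the cloud), which is the kind of resource tradeoff described above.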

This project is leading to a concrete understanding of the fundamental relationships between contemporary fog network architectures and ML model training. The orchestration of device-level, fog-level, and cloud-level decision-making will expand the limits of distributed training performance under resource heterogeneity and statistical diversity. In addition to its focus on optimizing ML training through networks, this project is developing innovative ML techniques leveraging domain knowledge to support and enhance these optimizations at scale.

This project has both technical and educational broader impacts. The elevated model learning vs. resource efficiency tradeoff achieved will result in lower mobile energy consumption from emerging edge intelligence tasks and better quality of experience for end users. Further, the results of this project will motivate new research directions in (1) ML, based on heterogeneous system constraints, and (2) distributed computing for other applications. The educational broader impacts will promote the importance of data-driven optimization for/by network systems. They are being achieved through new undergraduate and graduate courses with personalized learning modules on specific topics.

Personnel

The following individuals are the current main personnel involved in the project:

- Christopher G. Brinton, Principal Investigator, Purdue University

- Tom (Wenzhi) Fang, Graduate Student, Purdue University

- Evan Chen, Graduate Student, Purdue University

- Dong-Jun Han, Postdoctoral Research Associate, Purdue University

Collaborators

I am thankful to have several collaborators on both the research and education development efforts:

- Ali Alipour (Seyyedali Hosseinalipour), Assistant Professor, University at Buffalo

- Taejoon Kim, Associate Professor, University of Kansas

- Andrew S. Lan, Assistant Professor, University of Massachusetts Amherst

- David J. Love, Nick Trbovich Professor, Purdue University

- Nicolo Michelusi, Associate Professor, Arizona State University

- Rohit Parasnis, Postdoctoral Researcher, Massachusetts Institute of Technology

- Rajeev Sahay, Assistant Teaching Professor, University of California San Diego

- Henry (Su) Wang, Postdoctoral Researcher, Princeton University

Publications

The following is a list of publications produced since the start of the project, ordered chronologically:

- J. Kim, S. Hosseinalipour, A. Marcum, T. Kim, D. Love, C. Brinton. Learning-Based Adaptive IRS Control with Limited Feedback Codebooks. IEEE Transactions on Wireless Communications, 2022.

- D. Nguyen, S. Hosseinalipour, D. Love, P. Pathirana, C. Brinton. Latency Optimization for Blockchain Empowered Federated Learning in Multi-Server Edge Computing. IEEE Journal on Selected Areas in Communications, 2022.

- J. Kim, S. Hosseinalipour, T. Kim, D. Love, C. Brinton. Linear Coding for Gaussian Two-Way Channels. Annual Allerton Conference on Communication, Control, and Computing (Allerton), 2022.

- Y. Chu, S. Hosseinalipour, E. Tenorio, L. Cruz, K. Douglas, A. Lan, C. Brinton. Mitigating Biases in Student Performance Prediction via Attention-Based Personalized Federated Learning. Conference on Information and Knowledge Management (CIKM), 2022.

- N. Yang, S. Wang, M. Chen, C. Brinton, C. Yin, W. Saad, S. Cui. Model-Based Reinforcement Learning for Quantized Federated Learning Performance Optimization. IEEE Global Communications Conference (Globecom), 2022.

- S. Wang, S. Hosseinalipour, M. Gorlatova, C. Brinton, M. Chiang. UAV-assisted Online Machine Learning over Multi-Tiered Networks: A Hierarchical Nested Personalized Federated Learning Approach. IEEE Transactions on Network and Service Management, 2022.

- D. Han, D. Kim, M. Choi, C. Brinton, J. Moon. SplitGP: Achieving Both Generalization and Personalization in Federated Learning. IEEE International Conference on Computer Communications (INFOCOM), 2023.

- S. Wang, R. Sahay, C. Brinton. How Potent are Evasion Attacks for Poisoning Federated Learning-Based Signal Classifiers? IEEE International Conference on Communications (ICC), 2023.

- Z. Zhou, S. Azam, C. Brinton, D. Inouye. Efficient Federated Domain Translation. International Conference on Learning Representations (ICLR), 2023.

- D. Kushwaha, S. Redhu, C. Brinton, R. Hegde. Optimal Device Selection in Federated Learning for Resource Constrained Edge Networks. IEEE Internet of Things Journal, 2023.

- B. Velasevic, R. Parasnis, C. Brinton, N. Azizan. On the Effects of Data Heterogeneity on the Convergence Rates of Distributed Linear System Solvers. IEEE Conference on Decision and Control (CDC), 2023.

- G. Lan, H. Wang, J. Anderson, C. Brinton, V. Aggarwal. Improved Communication Efficiency in Federated Natural Policy Gradient via ADMM-based Gradient Updates. Conference on Neural Information Processing Systems (NeurIPS), 2023.

- A. Das, A. Ramamoorthy, D. Love, C. Brinton. Distributed Matrix Computations with Low-weight Encodings. IEEE Journal on Selected Areas in Information Theory, 2023.

- B. Ganguly, S. Hosseinalipour, K. Kim, C. Brinton, V. Aggarwal, D. Love, M. Chiang. Multi-Edge Server-Assisted Dynamic Federated Learning with an Optimized Floating Aggregation Point. IEEE/ACM Transactions on Networking, 2023.

- S. Wang, S. Hosseinalipour, V. Aggarwal, C. Brinton, D. Love, W. Su, M. Chiang. Toward Cooperative Federated Learning over Heterogeneous Edge/Fog Networks. IEEE Communications Magazine, 2023.

- R. Sahay, M. Zhang, D. Love, C. Brinton. Defending Adversarial Attacks on Deep Learning Based Power Allocation in Massive MIMO Using Denoising Autoencoders. IEEE Transactions on Cognitive Communications and Networking, 2023.

- M. Oh, S. Hosseinalipour, T. Kim, D. Love, J. Krogmeier, C. Brinton. Dynamic and Robust Sensor Selection Strategies for Wireless Positioning with TOA/RSS Measurement. IEEE Transactions on Vehicular Technology, 2023.

- J. Kim, T. Kim, D. Love, C. Brinton. Robust Non-Linear Feedback Coding via Power-Constrained Deep Learning. International Conference on Machine Learning (ICML), 2023.

- E. Ruzomberka, D. Love, C. Brinton, A. Gupta, C. Wang, H. V. Poor. Challenges and Opportunities for Beyond-5G Wireless Security. IEEE Security and Privacy, 2023.

- M. Oh, A. Das, S. Hosseinalipour, T. Kim, D. Love, C. Brinton. A Decentralized Pilot Assignment Algorithm for Scalable O-RAN Cell-Free Massive MIMO. IEEE Journal on Selected Areas in Communications, 2024.

- S. Hosseinalipour, S. Wang, N. Michelusi, V. Aggarwal, C. Brinton, D. Love, M. Chiang. Parallel Successive Learning for Dynamic Distributed Model Training over Heterogeneous Wireless Networks. IEEE/ACM Transactions on Networking, 2024.

- E. Chen, S. Wang, C. Brinton. Taming Subnet-Drift in D2D-Enabled Fog Learning: A Hierarchical Gradient Tracking Approach. IEEE International Conference on Computer Communications (INFOCOM), 2024.

- S. Wang, S. Hosseinalipour, C. Brinton. Multi-Source to Multi-Target Decentralized Federated Domain Adaptation. IEEE Transactions on Cognitive Communications and Networking, 2024.

GitHub Code Repositories

The following is a list of GitHub repositories created based on the research efforts in this project:

- UAV-assisted Online Machine Learning over Multi-Tiered Networks

- Semi-Decentralized Fog Learning

- Gradient Tracking in Semi-Decentralized Fog Learning

- Physical-Layer Coding in Fog Learning

- Adversarial Attacks in Fog Learning

- Domain Adaptation in Fog Learning

Educational Activities

The following educational activities have been undertaken as part of the integrated research and education plan of the project:

- ECE 301: Signals and Systems: This course has been taught twice by the PI at Purdue, in fall 2022 and spring 2023. An undergraduate student from the fall 2022 offering, Adam Piaseczny, has been consistently engaged in research on fog learning, and will present his resulting first-authored paper at IEEE ICC in 2024.

- ECE 60022: Wireless Communication Networks: The PI taught this course at Purdue in spring 2022. It includes modules on the fundamentals of data-driven and machine learning-driven services delivered over wireless networks.

- HON 399: Principles of Networks: The PI co-created this course at Purdue, intended for an interdisciplinary audience. Based on his book, its first offering was in fall 2023. In the course project, teams of students from humanities, business, science, and engineering majors worked together on contemporary networking problems.

- EPICS: Harnessing the Data Revolution: The PI has co-led an Engineering Projects in Community Service (EPICS) team at Purdue each semester since fall 2021. This team is focused on providing data science solutions to Native American tribes in the Dakotas.

- Personalized Education: The team is involved in a Purdue University-wide initiative on developing analytical tools for improving engagement in first-year online courses. We have tailored the federated learning solutions developed in this project to improve the quality of analytics provided to students from underrepresented groups.

Outreach Activities

The following outreach activities have been undertaken in the project:

- FOGML Workshop: The PI has been co-organizing an annual workshop (2021, 2023, 2024) on Distributed Machine Learning and Fog Networks (FOGML), in conjunction with IEEE INFOCOM.

- Quantum Summer School: The PI has been delivering annual lectures at Purdue's Quantum Summer School on the basic principles of fog learning, highlighting synergies with quantum computing, through Purdue's Quantum Science and Engineering Institute.